Four engineering students combine character recognition and text-to-speech technologies to help visually impaired students

Braille is the script used by the visually impaired. Its main drawback is that few books are printed in it. There is just one fortnightly magazine in the country, and it does not reach the interiors.

Printing novels or general knowledge books in Braille is not cost-effective. To overcome this problem, four students of M S Ramaiah Institute of Technology (Abrar Ahmed, Anil L P, Harish Kumar B and Sadikul Amin) have come up with a device that can recognise printed text and read it aloud in a chosen language.

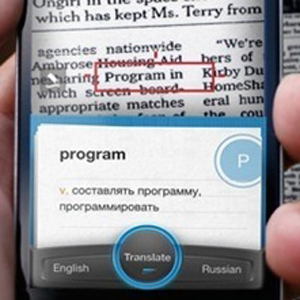

The students took up this project, named Eyes That Speak, following visits to schools for visually impaired persons in and around Bangalore. They were disappointed by the facilities provided. They came up with a device that combines OCR (Optical Character Recognition) and text-to-speech. OCR is a field of research in artificial intelligence and computer vision. It allows computers to recognise printed characters without human intervention. It is crucial to the computerisation of printed text, which can then be electronically searched, stored compactly, displayed online and used in processes such as translation, text-to-speech and text mining.

The goal of text-to-speech is the automatic conversion of text to spoken form. This field of speech research has seen significant advances over the past decade, with many systems able to generate output that closely resembles a natural voice.

Harish explained the working of their device. “A small web cam is used to capture an image of the printed text. The image is processed to make sure that the text is clear before it is passed on to the OCR algorithm, which enhances the red-blue-green image and sends it to a specially designed application for processing. The application uses two steps. It analyses the text lines, breaks each word and recognises the language before giving the output through an audio device,” he said.
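
The article does not say which algorithms the students used, so the sketch below is only an illustration of how the "analyse the text lines, break each word" stage could work. It assumes a textbook projection-profile approach: the colour image is turned into a binary ink map, rows containing ink are grouped into text lines, and columns containing ink within a line are grouped into words. All function names, the threshold of 128 and the word gap of 2 pixels are hypothetical choices made for this example.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Span = Tuple[int, int]  # (start, end) with end exclusive


def to_binary(image: List[List[RGB]], threshold: int = 128) -> List[List[int]]:
    """Convert an RGB image to a binary map: 1 = ink (dark), 0 = background."""
    binary = []
    for row in image:
        out_row = []
        for r, g, b in row:
            # ITU-R BT.601 luma weights, a common grayscale conversion.
            gray = 0.299 * r + 0.587 * g + 0.114 * b
            out_row.append(1 if gray < threshold else 0)
        binary.append(out_row)
    return binary


def runs(profile: List[int], min_gap: int = 1) -> List[Span]:
    """Find runs of non-zero values in a projection profile.

    Runs separated by fewer than min_gap zeros are merged into one span.
    """
    spans = []
    start = None
    gap = 0
    for i, value in enumerate(profile):
        if value > 0:
            if start is None:
                start = i
            gap = 0
        elif start is not None:
            gap += 1
            if gap >= min_gap:
                # The last ink position was at index i - gap.
                spans.append((start, i - gap + 1))
                start = None
                gap = 0
    if start is not None:
        spans.append((start, len(profile) - gap))
    return spans


def segment_lines(binary: List[List[int]]) -> List[Span]:
    """Group consecutive rows that contain ink into text lines."""
    profile = [sum(row) for row in binary]
    return runs(profile, min_gap=1)


def segment_words(binary: List[List[int]], line: Span, word_gap: int = 2) -> List[Span]:
    """Split one text line into words using the column ink profile.

    Gaps narrower than word_gap are treated as spacing between letters.
    """
    top, bottom = line
    width = len(binary[0])
    profile = [sum(binary[y][x] for y in range(top, bottom)) for x in range(width)]
    return runs(profile, min_gap=word_gap)
```

In a full pipeline, each word span would be cropped and handed to a character recogniser, and the recognised text would then go to a speech synthesiser. Those stages are left out here because the article gives no detail about them.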

Sadikul said, “The aim is to increase the accuracy of the OCR. We can satisfactorily say that our model can recognise even slightly tilted text.”

Anil said, “Our case study concluded that there were limited facilities for reading for the visually challenged. Eyes That Speak is aimed at providing easy solutions to the reading problems faced by visually impaired students, but it has more potential.”

“OCR is widely used in many other fields, including education, finance, and government agencies. Our model can also be used to process cheques in banks without human involvement. In order to save space and eliminate sifting through boxes of paper files, documents can be scanned and uploaded on computers,” added Abrar Ahmed.

Source: http://www.bangaloremirror.com / Bangalore Mirror / by Purushotam Rao and Jinil M / Sunday, May 27th, 2012